Imagine trying to plug your keyboard, mouse, camera, and phone into your laptop before USB was common: different ports, device-specific drivers, and plenty of frustration. Then came USB, a universal standard that solved the chaos.

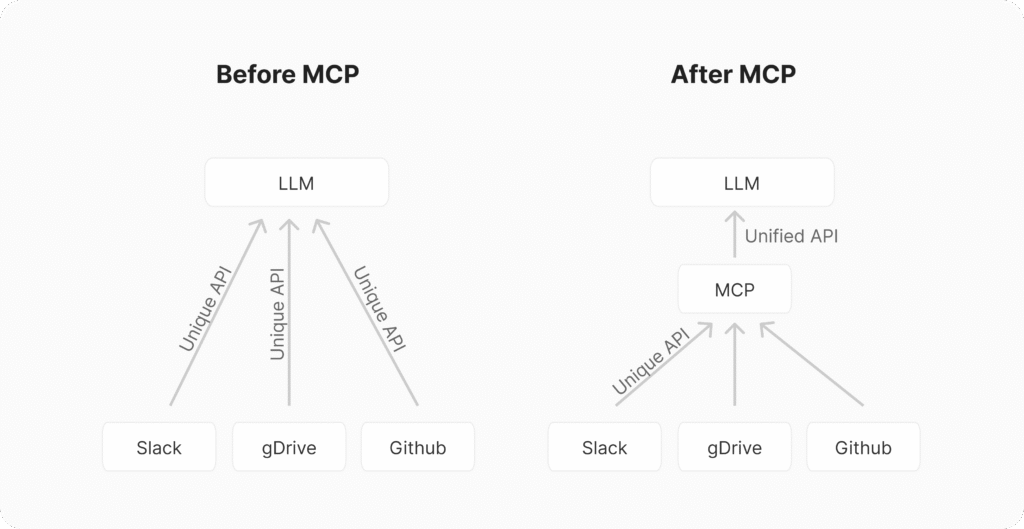

Now, imagine that same chaos in the world of AI applications: bots, assistants, IDE plugins, all trying to talk to external tools like GitHub, Slack, Asana, or your local database. Each AI app needs a custom integration for each tool. That's M × N complexity: multiply the number of apps (M) by the number of tools (N), and you've got an integration nightmare.

That’s where Model Context Protocol (MCP) steps in.

🚀 What is MCP?

MCP is an open standard introduced by Anthropic to streamline how AI applications (like chatbots, IDE assistants, or custom agents) connect with tools, data, and services. Instead of every AI app integrating with every external system individually, MCP turns this into an M + N problem.

In short:

- Each app implements an MCP client once.

- Each tool or service provider builds one MCP server.

- Everyone communicates through the same standardized protocol.
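The difference between the two models is easy to quantify. Here is a quick back-of-the-envelope calculation. The ecosystem size of 10 AI apps and 50 tools is made up purely for illustration.

```python
def point_to_point(apps: int, tools: int) -> int:
    """Without a shared protocol: every app needs a custom integration for every tool."""
    return apps * tools


def with_mcp(apps: int, tools: int) -> int:
    """With MCP: each app implements one client, each tool ships one server."""
    return apps + tools


# Hypothetical ecosystem size, chosen only for illustration
apps, tools = 10, 50
print(point_to_point(apps, tools))  # 500
print(with_mcp(apps, tools))        # 60
```

Adding one more tool costs 10 new integrations in the first model, but only one new MCP server in the second.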

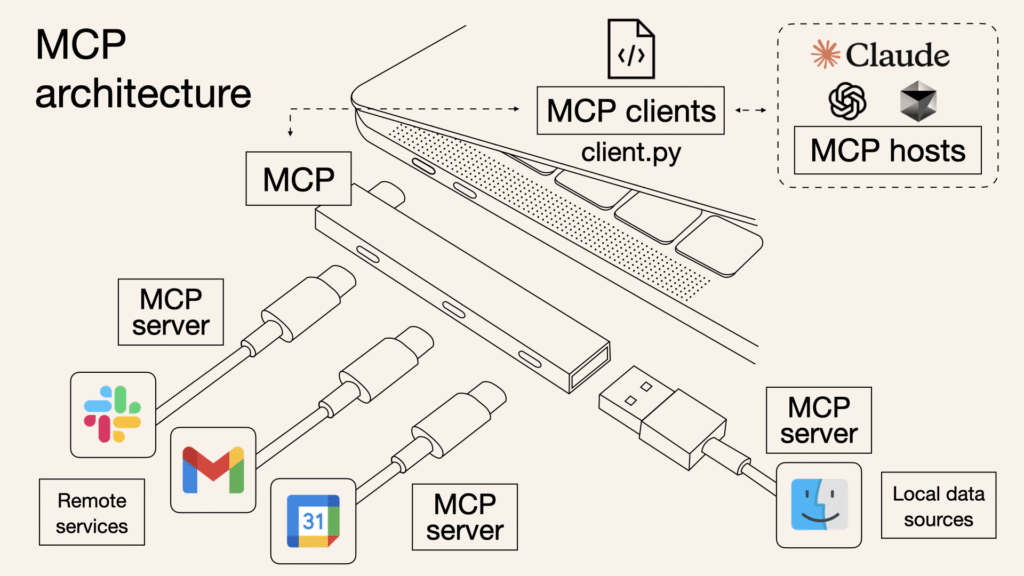

🔄 How MCP Works (The Architecture)

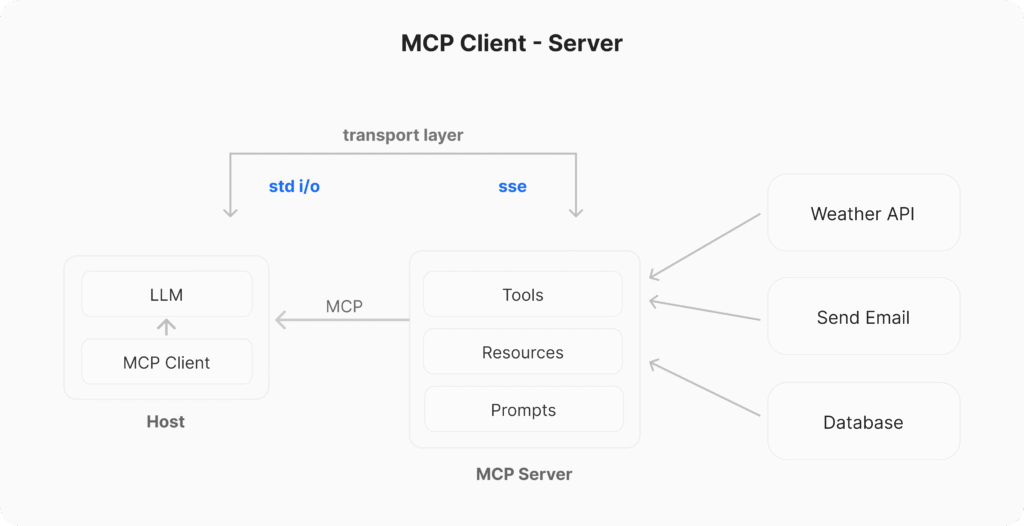

MCP follows a client-server model:

- Host: The AI application itself, like Claude Desktop, Cursor IDE, or a custom agent.

- Client: A component inside the host app that maintains a one-to-one connection with a single MCP server.

- Server: A program that wraps an external service or data source (like GitHub, Slack, or your filesystem) and exposes Tools, Resources, and Prompts to the AI.

Components of an MCP Server:

- Tools (Model-controlled): Actions that the AI can trigger (e.g., “get weather”, “fetch GitHub issues”).

- Resources (App-controlled): Read-only data the host application can load into the AI's context, such as file contents, database records, or API responses. Think of them as the GET endpoints of a REST API.

- Prompts (User-controlled): Pre-defined templates to guide AI responses using Tools or Resources.
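To make the three primitives concrete, here is roughly what a server advertises for each during discovery (in its `tools/list`, `resources/list`, and `prompts/list` responses). This is a sketch. The field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`, `arguments`) follow the MCP specification, while the weather tool, README resource, and review prompt values are hypothetical.

```python
import json

# Hypothetical descriptors for one server; only the field names come from the MCP spec.
tool = {
    "name": "get_weather",
    "description": "Get the current weather for a city",
    "inputSchema": {  # JSON Schema describing the arguments the model must supply
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

resource = {
    "uri": "file:///project/README.md",  # resources are addressed by URI
    "name": "Project README",
    "mimeType": "text/markdown",
}

prompt = {
    "name": "review_code",
    "description": "Ask the model to review a code snippet",
    "arguments": [{"name": "code", "description": "Code to review", "required": True}],
}

print(json.dumps({"tools": [tool], "resources": [resource], "prompts": [prompt]}, indent=2))
```

Because tool inputs are plain JSON Schema, a host can hand them to a model without any tool-specific glue code.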

⚙️ How It All Comes Together: Step-by-Step

Let's walk through what happens from the moment an AI app starts until it answers the user:

- Initialization: The host app launches and spawns one MCP client for each MCP server it wants to talk to.

- Discovery: Clients connect to servers and ask, “Hey, what tools/resources/prompts do you have?”

- Context Setup: The host collects the available tools, resources, and prompts and converts them into a format the AI model understands (typically a JSON schema for function calling).

- Invocation: If the AI decides it needs info (like open GitHub issues), it uses a Tool via the client.

- Execution: Server performs the task (calls the GitHub API) and gets the result.

- Response: Server sends the data back to the client.

- Completion: Client sends the info to the Host, which updates the AI’s context so it can generate the final response for the user.
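The seven steps can be traced in a toy, in-memory simulation. Everything here is hypothetical: `ToyServer`, `ToyClient`, `run_host`, the `get_open_issues` tool, and the canned issue data are illustrative stand-ins. There is no real transport, SDK, or LLM involved. A real host would also let the model choose the tool in the Invocation step instead of hard-coding it.

```python
import json
from typing import Any, Callable


class ToyServer:
    """Hypothetical stand-in for an MCP server; no real transport or GitHub call."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., Any]] = {"get_open_issues": self._get_open_issues}

    def list_tools(self) -> list[dict[str, Any]]:
        # Discovery: describe each tool by name and docstring
        return [{"name": name, "description": fn.__doc__} for name, fn in self.tools.items()]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        # Execution: a real server would call the GitHub API here
        return self.tools[name](**arguments)

    @staticmethod
    def _get_open_issues(repo: str) -> list[str]:
        """List open issues for a repository."""
        return [f"{repo}#1: Fix login bug"]  # canned data for the sketch


class ToyClient:
    """One client per server, as in the Initialization step."""

    def __init__(self, server: ToyServer) -> None:
        self.server = server

    def discover(self) -> list[dict[str, Any]]:
        return self.server.list_tools()

    def invoke(self, name: str, arguments: dict[str, Any]) -> Any:
        return self.server.call_tool(name, arguments)


def run_host() -> list[str]:
    context: list[str] = []
    client = ToyClient(ToyServer())                  # Initialization
    tools = client.discover()                        # Discovery
    context.append(json.dumps({"tools": tools}))     # Context Setup: tools as JSON for the model
    # Invocation (hard-coded here), then Execution and Response inside the toy server
    result = client.invoke("get_open_issues", {"repo": "octo/demo"})
    context.append(json.dumps({"tool_result": result}))  # Completion: result joins the context
    return context


for message in run_host():
    print(message)
```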

🛠️ Building Your Own MCP Server (Python Example)

Want to build an MCP server? Here’s a quick example using FastMCP in Python:

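```python
# Standalone fastmcp package; the official MCP SDK also ships it as mcp.server.fastmcp
from fastmcp import FastMCP

mcp = FastMCP("Demo")


@mcp.tool()
def add(a: int, b: int) -> int:
    """Add two numbers."""  # docstrings become the descriptions clients see
    return a + b


@mcp.resource("greeting://{name}")
def get_greeting(name: str) -> str:
    """Return a personalized greeting."""
    return f"Hello, {name}!"


@mcp.prompt()
def review_code(code: str) -> str:
    """Ask the model to review a code snippet."""
    return f"Please review this code:\n\n{code}"


if __name__ == "__main__":
    # Serve over stdio (the default) so a client can launch this file as a subprocess
    mcp.run()
```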

This server exposes:

- A tool to add numbers.

- A resource that returns a personalized greeting.

- A prompt to review code snippets.

🌍 Pre-built MCP Servers You Can Use

💬 Building MCP Clients

MCP Clients are embedded inside host applications. They handle:

- Server connection

- Tool/resource/prompt discovery

- Forwarding requests and returning results

Example using Python’s mcp package:

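```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Launch the server from the previous section (saved as example_server.py) over stdio
server_params = StdioServerParameters(command="python", args=["example_server.py"])


async def main():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            # Handshake: negotiate protocol version and capabilities
            await session.initialize()

            # Discovery: list the tools the server exposes
            tools = await session.list_tools()
            print([tool.name for tool in tools.tools])

            # Invocation: call the "add" tool with JSON arguments
            result = await session.call_tool("add", arguments={"a": 5, "b": 3})
            print(result.content)


asyncio.run(main())
```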

📈 Why MCP Is Gaining Serious Momentum

Since its launch, MCP has gone from an idea to an entire ecosystem. Here’s why developers and companies are jumping in:

✅ AI-Native Design

MCP isn’t retrofitting old API concepts. It’s made for LLM-based apps — treating tools as function calls and resources as dynamic context.
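One concrete reading of "tools as function calls" is this: an MCP tool descriptor already carries a name, a description, and a JSON Schema for its inputs. That is close to what LLM function-calling APIs expect. The sketch below maps one descriptor to a generic declaration. The `to_function_declaration` helper and its output shape (`name` / `description` / `parameters`) are assumptions, not any specific provider's format.

```python
from typing import Any


def to_function_declaration(mcp_tool: dict[str, Any]) -> dict[str, Any]:
    """Map an MCP tool descriptor to a generic function-calling declaration.

    The output shape is an assumption mirroring common LLM function-calling
    APIs; check your model provider's documentation for the exact format.
    """
    return {
        "name": mcp_tool["name"],
        "description": mcp_tool.get("description", ""),
        # MCP already describes arguments with JSON Schema, so it passes through unchanged
        "parameters": mcp_tool["inputSchema"],
    }


# Hypothetical descriptor shaped like the "add" tool from the FastMCP example
add_tool = {
    "name": "add",
    "description": "Add two numbers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
}

print(to_function_declaration(add_tool))
```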

✅ Great Spec & Open Standard

Anthropic didn’t just publish a spec — they dogfooded it:

- Claude Desktop already supports MCP servers.

- Reference servers exist for Git, Slack, local files, and more.

- SDKs are available for Python, TypeScript, and Java.

- The MCP Inspector helps with testing and debugging.

✅ Built on LSP Concepts

MCP takes cues from the Language Server Protocol (LSP) — proven, scalable, and built on standards like JSON-RPC 2.0.
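On the wire, that JSON-RPC 2.0 heritage shows up as a handful of message shapes. This sketch builds the messages a client might send for the session in the client example. Requests carry an `id` that the matching response echoes, and notifications carry no `id` and get no reply. The method names (`initialize`, `notifications/initialized`, `tools/list`, `tools/call`) and the `"2024-11-05"` protocol revision come from the MCP specification. The helper functions, client name, and response values are illustrative assumptions.

```python
import json
from itertools import count
from typing import Any

_ids = count(1)  # request ids; each response echoes the id of its request


def request(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """A JSON-RPC 2.0 request: the server must answer with the same id."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def notification(method: str) -> dict[str, Any]:
    """A JSON-RPC 2.0 notification: no id, so no response is expected."""
    return {"jsonrpc": "2.0", "method": method}


messages = [
    request("initialize", {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "demo-client", "version": "0.1"},  # hypothetical client
    }),
    notification("notifications/initialized"),
    request("tools/list", {}),
    request("tools/call", {"name": "add", "arguments": {"a": 5, "b": 3}}),
]

# What a server might send back for the tools/call request (id 3)
response = {
    "jsonrpc": "2.0",
    "id": 3,
    "result": {"content": [{"type": "text", "text": "8"}], "isError": False},
}

for msg in messages:
    print(json.dumps(msg))
```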

✅ Real Adoption

- Cursor, Windsurf, and LibreChat have all integrated it.

- Composio released hundreds of ready-to-use MCP servers.

- OpenAI announced MCP support.

- Thousands of community-built integrations are already live.

🤖 Real-World Example: Gemini + MCP + Airbnb Server

Let’s say you’re building an AI agent using Google’s Gemini and want to connect to an Airbnb server via MCP:

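```python
import asyncio

from google import genai
from google.genai import types
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Reads the API key from the GEMINI_API_KEY (or GOOGLE_API_KEY) environment variable
client = genai.Client()

# Launch the community Airbnb MCP server as a subprocess over stdio
server_params = StdioServerParameters(
    command="npx",
    args=["-y", "@openbnb/mcp-server-airbnb"],
)


async def main():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            tools = await session.list_tools()
            print("Available tools:", [tool.name for tool in tools.tools])

            # Use Gemini to generate and execute tool calls via MCP: recent
            # google-genai versions (experimental) accept the session as a tool
            response = await client.aio.models.generate_content(
                model="gemini-2.0-flash",  # example model name
                contents="Find an Airbnb in Paris for 2 adults next weekend",
                config=types.GenerateContentConfig(tools=[session]),
            )
            print(response.text)


asyncio.run(main())
```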

🎯 Final Thoughts

MCP isn’t just another protocol—it’s the missing glue between powerful AI models and the tools/data they need to interact with. It simplifies architecture, accelerates integration, and fosters a growing community of AI builders.

Whether you’re building chatbots, IDE tools, or smart agents, MCP is worth exploring—and it just might become the new standard for how AI talks to the world.

Watch the video for reference:
