Introduction to Load Balancing

Load balancing is a crucial component of system design: it spreads incoming requests and traffic across multiple servers. The main goal of load balancing is to ensure high availability, reliability, and performance by preventing any single server from being overloaded and by avoiding downtime.

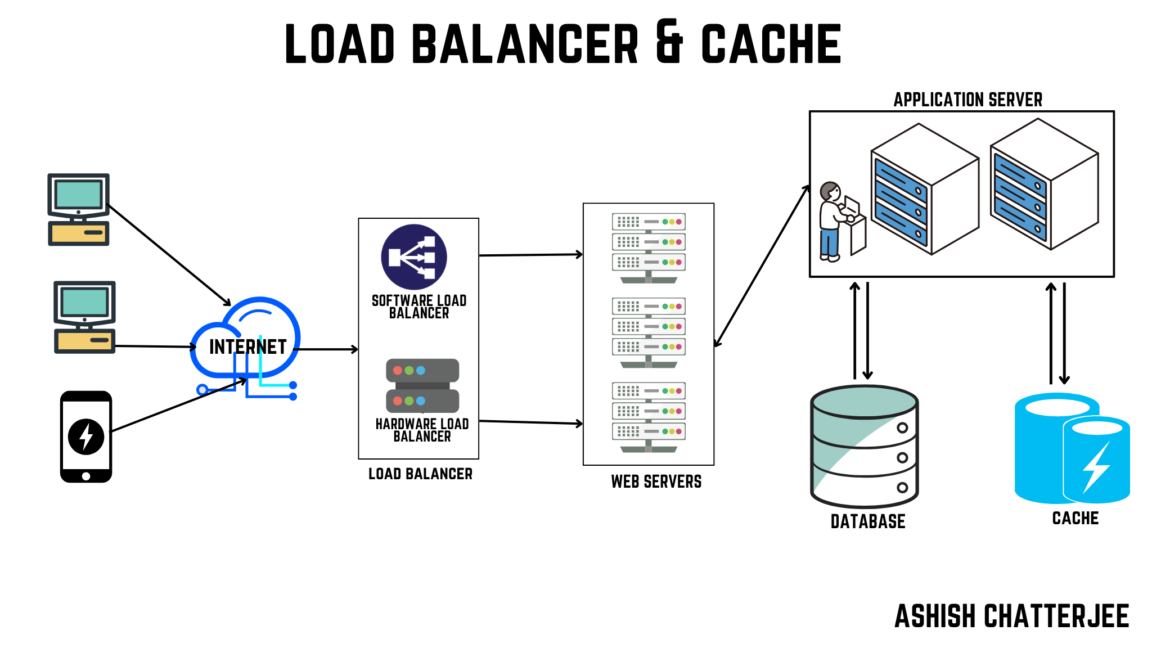

Typically, a load balancer sits between the clients and the servers, accepting incoming network and application traffic and distributing it across multiple backend servers using various algorithms. By balancing application requests across multiple servers, a load balancer reduces the load on individual servers and prevents any one server from becoming a single point of failure, which improves overall application availability and responsiveness.
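To make the idea concrete, here is a minimal in-process Python sketch of a load balancer sitting in front of several backends. It is a toy built on assumptions: the backends are plain functions created by a hypothetical `make_backend` helper rather than networked servers, and round robin is used only because it is the simplest selection rule.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List

# Hypothetical backend: any callable that takes a request string and returns a response.
Backend = Callable[[str], str]


def make_backend(name: str) -> Backend:
    """Create a fake backend server that tags each response with its name."""
    def handle(request: str) -> str:
        return f"{name} handled {request}"
    return handle


class LoadBalancer:
    """Sits between clients and backends and forwards each request to one backend."""

    def __init__(self, backends: List[Backend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        # Round robin: hand requests to the backends in a repeating order.
        self._next = cycle(backends)

    def handle(self, request: str) -> str:
        backend = next(self._next)
        return backend(request)


if __name__ == "__main__":
    lb = LoadBalancer([make_backend(f"server-{i}") for i in range(3)])
    responses = [lb.handle(f"req-{n}") for n in range(9)]
    # Count how many requests each server received: 3 each instead of 9 on one server.
    print(Counter(r.split()[0] for r in responses))
```

The client only ever talks to `lb.handle`, so backends can be added behind it without clients noticing.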

Key characteristics of Load Balancers

Below are some of the key characteristics of load balancers:

- Traffic Distribution: To keep any one server from becoming overburdened, load balancers divide incoming requests evenly among several servers.

- High Availability: By spreading traffic across several servers, load balancers improve an application's reliability and availability. If one server fails, the load balancer reroutes traffic to the remaining healthy servers.

- Scalability: By making it simple to add servers or resources to meet growing traffic demands, load balancers enable horizontal scaling.

- Optimization: Load balancers optimize resource utilization, ensuring efficient use of server capacity and preventing bottlenecks.

- Health Monitoring: Load balancers often monitor the health of servers, directing traffic away from servers experiencing issues or downtime.

- SSL Termination: Some load balancers can handle SSL/TLS encryption and decryption, offloading this resource-intensive task from servers.
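The health-monitoring, high-availability, and scalability behavior described above can be sketched in Python. This is a minimal, hypothetical in-process model: `Backend`, `run_health_checks`, `HealthAwareBalancer`, and the `probe` callable are illustrative names, not any product's API. A real load balancer would probe servers over the network (for example, with a request to a health endpoint) on a timer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Backend:
    name: str
    healthy: bool = True


def run_health_checks(backends: List[Backend], probe: Callable[[Backend], bool]) -> None:
    """Update each backend's health flag using a (hypothetical) probe function."""
    for backend in backends:
        backend.healthy = probe(backend)


class HealthAwareBalancer:
    """Round robin over backends, skipping any that failed their last health check."""

    def __init__(self, backends: List[Backend]) -> None:
        self.backends = backends
        self._index = 0

    def add_backend(self, backend: Backend) -> None:
        # Horizontal scaling: a new server joins the rotation immediately.
        self.backends.append(backend)

    def pick(self) -> Backend:
        # Try each backend at most once per call, starting from the rotation cursor.
        for _ in range(len(self.backends)):
            backend = self.backends[self._index % len(self.backends)]
            self._index += 1
            if backend.healthy:
                return backend
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    servers = [Backend("a"), Backend("b"), Backend("c")]
    lb = HealthAwareBalancer(servers)
    # Pretend server "b" stops responding to its health probe.
    run_health_checks(servers, probe=lambda s: s.name != "b")
    print([lb.pick().name for _ in range(4)])  # ['a', 'c', 'a', 'c']
```

Traffic simply flows around the failed server; once a later health check marks it healthy again, it rejoins the rotation without any change on the client side.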

With or without a load balancer

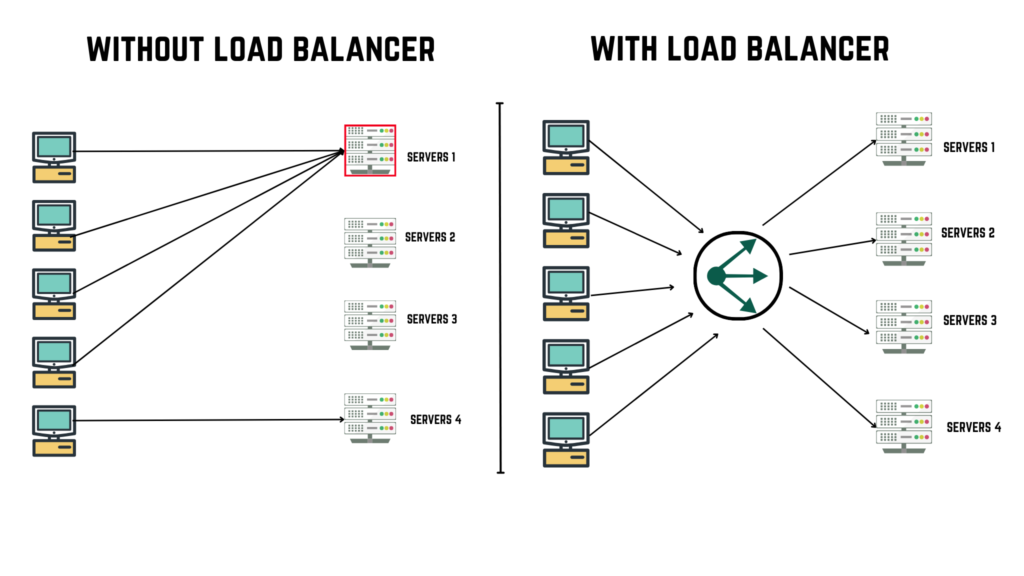

What happens if there is no load balancer?

Before looking at how a load balancer works, let's look at the problems that occur when all traffic goes to a single server without one.

- Single Point of Failure: If the server goes down or runs into a problem, the whole application is interrupted and becomes unavailable to users until it recovers. This creates a poor user experience, which is unacceptable for service providers.

- Overloaded Servers: A web server can handle only a limited number of requests. As the business grows and the number of requests increases, the server becomes overloaded.

- Limited Scalability: Without a load balancer, adding more servers to share the traffic is complicated. All requests are still directed to one server, so adding new servers does not automatically relieve the load.

Types of Load Balancers

1. Hardware Load Balancers

These are physical devices installed in a data center to control how traffic is distributed among servers. Because they are specialized devices, they are highly reliable and perform well, but they are costly to purchase, scale, and maintain. They are often used by large companies with consistent, high traffic volumes.

2. Software Load Balancers

These are software programs that divide traffic among servers. Unlike hardware load balancers, they run on existing infrastructure (on-premises or in the cloud).

- Because software load balancers make it easy to adjust resources as needed, they are generally more scalable and less expensive than hardware load balancers.

- They are adaptable and appropriate for a range of businesses, including ones that use the cloud.

3. Cloud Load Balancers

Cloud load balancers, which cloud providers such as AWS, Google Cloud, and Azure offer as a service, distribute traffic automatically without requiring physical hardware. They are highly scalable, and users pay only for the resources they use. They suit dynamic workloads well because they integrate readily with cloud-based applications and adjust to traffic spikes.

Load balancer algorithms

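- Round robin (requests are distributed to the servers sequentially, in turn)

- Weighted round robin (handles servers with different processing capacities by sending more requests to higher-capacity servers)

- Least connection (each request is sent to the server with the fewest active connections)

- IP hash (the server is chosen from a hash of the client's IP address, so a given client consistently reaches the same server)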
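Each of the four algorithms above can be sketched in a few lines of Python. These are simplified, single-threaded illustrations with hypothetical server names. The weighted variant shown is the "smooth" weighted round robin (similar to the approach nginx uses), which interleaves picks instead of sending a burst of requests to the heaviest server; the IP hash uses SHA-256 only as an arbitrary stable hash.

```python
import hashlib
from itertools import cycle
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Round robin: hand out servers in a fixed, repeating order."""
    return cycle(servers)


class SmoothWeightedRoundRobin:
    """Weighted round robin: higher-weight servers get proportionally more
    requests, interleaved with the others rather than in bursts."""

    def __init__(self, weights: Dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns its weight; the highest score wins and pays back the total.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


class LeastConnections:
    """Least connection: send each new request to the server with the
    fewest requests currently in flight."""

    def __init__(self, servers: List[str]) -> None:
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call this when the request or connection finishes.
        self.active[server] -= 1


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """IP hash: the same client IP maps to the same server while the list is unchanged."""
    # A stable hash is needed because Python's built-in hash() of str varies per process.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


if __name__ == "__main__":
    rr = round_robin(["s1", "s2", "s3"])
    print([next(rr) for _ in range(4)])  # ['s1', 's2', 's3', 's1']

    wrr = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
    print([wrr.pick() for _ in range(7)])
    # ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']

    lc = LeastConnections(["s1", "s2"])
    first, second = lc.acquire(), lc.acquire()  # 's1', then 's2'
    lc.release(first)
    print(lc.acquire())  # 's1', because it now has 0 active requests
```

Note that plain modulo hashing in `ip_hash` remaps many clients whenever a server is added or removed; that is a known limitation of this simple form.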

L4 vs L7 Load Balancing:

A layer 4 load balancer makes routing decisions based on IP addresses and TCP or UDP ports. It has a packet-level view of the traffic exchanged between the client and the server, which means it makes decisions packet by packet without inspecting the application payload. The layer 4 connection is established between the client and the server.
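A layer 4 decision can be sketched as a function of header fields alone. The sketch below assumes the balancer hashes the connection's 5-tuple (source and destination IP, source and destination port, protocol), which shows why every packet of one connection lands on the same backend even though no payload is read. `FiveTuple` and `pick_backend` are hypothetical names, and the plain modulo hash is a simplification: production L4 balancers often use consistent hashing so that adding or removing a backend does not remap most existing connections.

```python
import hashlib
from typing import List, NamedTuple


class FiveTuple(NamedTuple):
    """The header fields a layer 4 balancer can see for a flow."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str  # "tcp" or "udp"


def pick_backend(flow: FiveTuple, backends: List[str]) -> str:
    """Map a flow to a backend using only L3/L4 header fields.

    Every packet of the same connection carries the same 5-tuple, so it
    hashes to the same backend; the application payload is never inspected.
    """
    key = f"{flow.src_ip}|{flow.src_port}|{flow.dst_ip}|{flow.dst_port}|{flow.protocol}"
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


if __name__ == "__main__":
    backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
    syn = FiveTuple("198.51.100.4", 51514, "203.0.113.10", 443, "tcp")
    data = FiveTuple("198.51.100.4", 51514, "203.0.113.10", 443, "tcp")
    # Two packets of the same TCP connection go to the same backend.
    print(pick_backend(syn, backends) == pick_backend(data, backends))  # True
```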

Layer 7 load balancing routes traffic more intelligently by inspecting content, such as HTTP headers, URLs, and cookies, to gain deeper context on the application request. This additional context allows the load balancer not only to optimize load balancing but also to rewrite content, perform security inspections, and implement access controls.
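By contrast, a layer 7 balancer can base its decision on the request itself. The following sketch assumes HTTP traffic and a hypothetical routing table: it sends requests to different backend pools by URL path prefix and applies a simple access-control rule. `HttpRequest`, `ROUTES`, `BLOCKED_PREFIXES`, and the `X-Internal` header are illustrative inventions, not part of any particular product.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class HttpRequest:
    """Only the parts of an HTTP request this sketch looks at."""
    method: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


# Hypothetical routing table: URL path prefix -> backend pool. First match wins.
ROUTES: List[Tuple[str, str]] = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
    ("/", "web-pool"),
]

# Hypothetical access-control rule: these paths require an internal-client header.
BLOCKED_PREFIXES = ("/admin/",)


def route(request: HttpRequest) -> Optional[str]:
    """Return the pool for a request, or None if access control rejects it."""
    if request.path.startswith(BLOCKED_PREFIXES) and request.headers.get("X-Internal") != "1":
        return None  # a real balancer would answer with e.g. 403 instead of forwarding
    for prefix, pool in ROUTES:  # order matters: "/" must come last
        if request.path.startswith(prefix):
            return pool
    return None


if __name__ == "__main__":
    print(route(HttpRequest("GET", "/api/users")))       # api-pool
    print(route(HttpRequest("GET", "/static/app.css")))  # static-pool
    print(route(HttpRequest("GET", "/admin/stats")))     # None
```

A layer 4 balancer could not make any of these decisions, because the path and headers live inside the payload it never reads.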
